Powering ChatGPT: Unveiling the Electricity Consumption of a Single Query


AI Energy Consumption per Query: How Much Power a Single User Request Draws

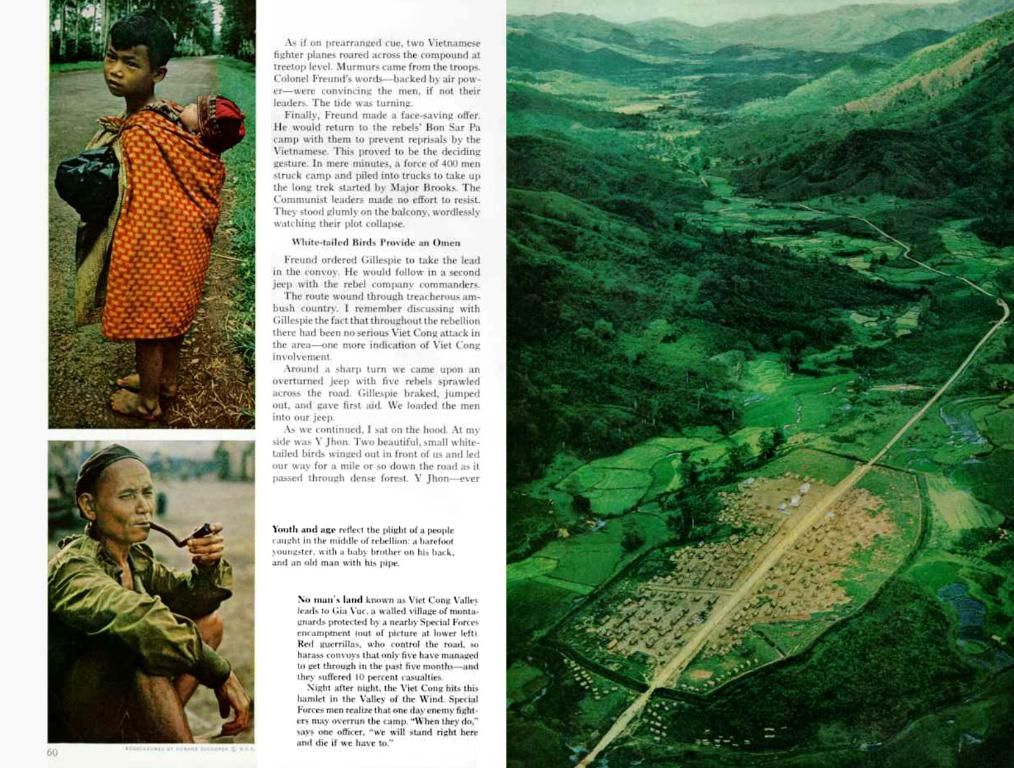

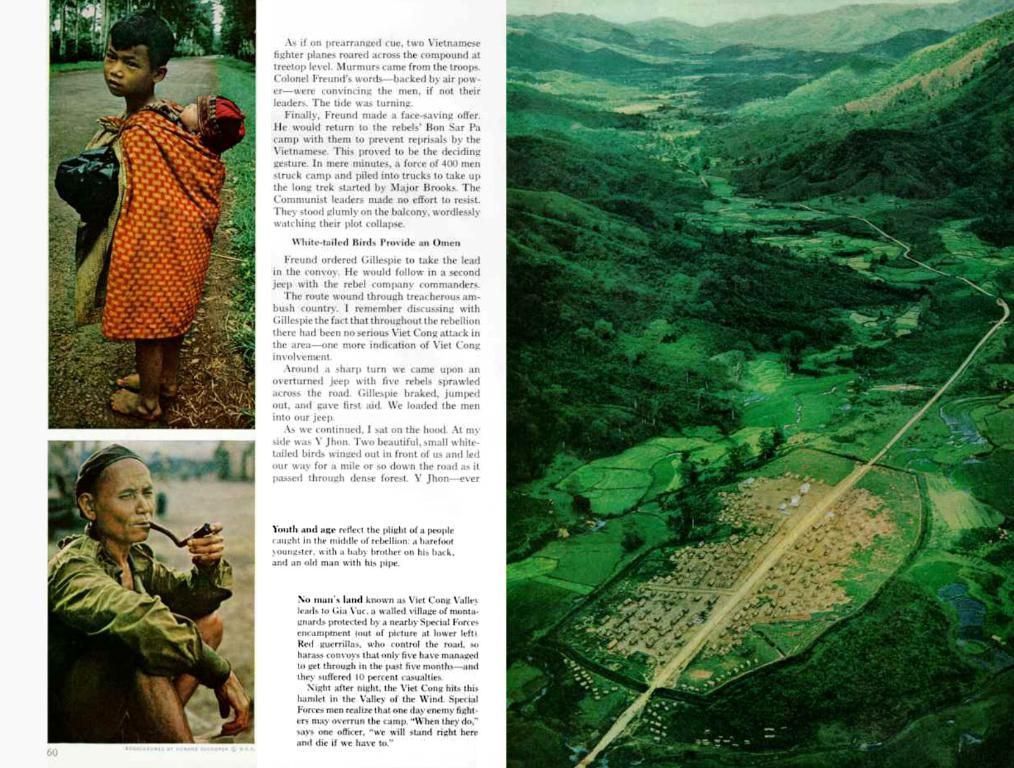

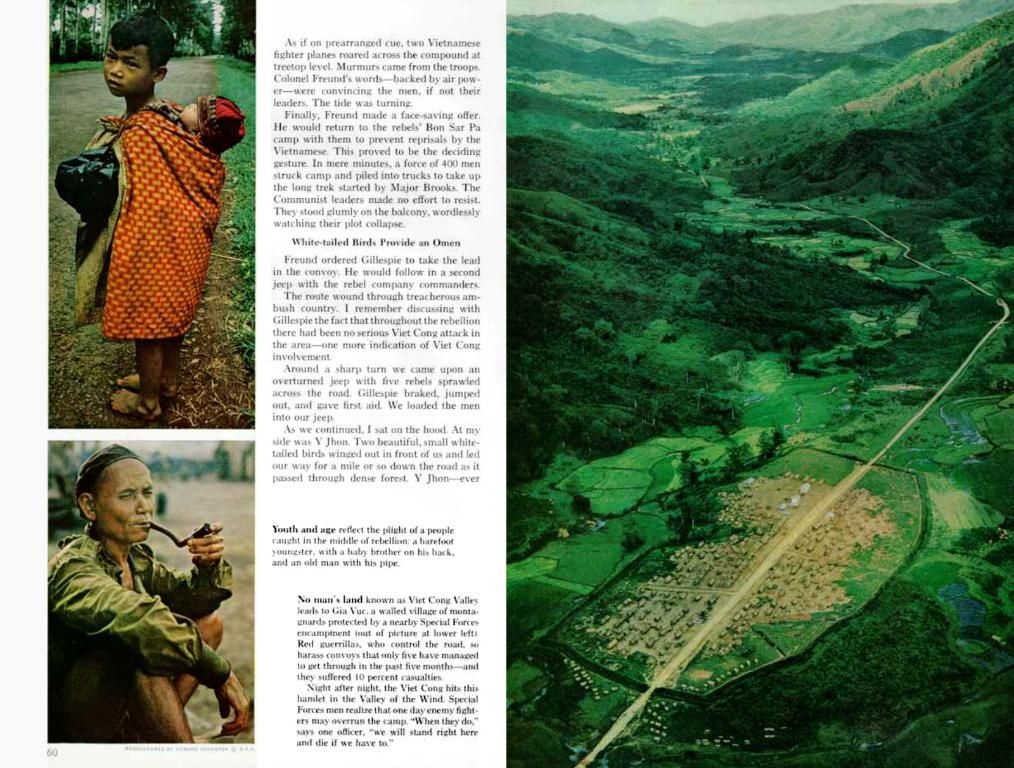

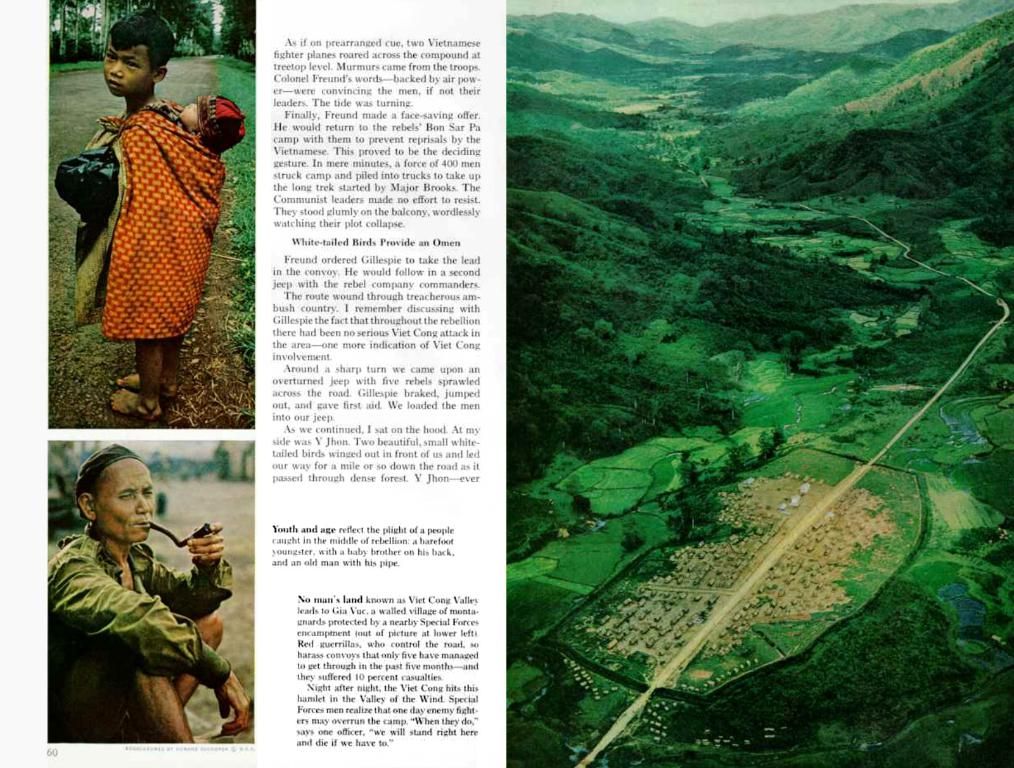

According to OpenAI, the company behind ChatGPT, a single query uses roughly as much electricity as an oven does in a little over one second. OpenAI CEO Sam Altman shared the figure in a blog post.

Warnings about rising power consumption from the widespread use of artificial intelligence applications have been circulating for some time. Although advances in chip and server technology mean that individual queries draw less power, the sheer volume of use is still driving a sharp rise in the power demands of AI data centers.

Giant corporations such as Microsoft, Google, and Amazon are actively seeking out nuclear power in the U.S. as a means to meet this energy demand, while keeping carbon emissions in check.

Water Footprint of ChatGPT: A Looming Issue

Because data centers need cooling, water consumption is another pressing concern. In recent years, several studies have tried to estimate the environmental impact of growing AI adoption. However, the researchers' calculations often rest on many assumptions.

In his blog post, Altman paints an optimistic picture of future AI advancements, though he acknowledges painful adjustments, including entire job categories becoming obsolete. Still, he foresees a world so prosperous that ideas that now seem far-fetched, such as a universal basic income funded by productivity gains, could become reality.

Altman states that the average ChatGPT query consumes approximately 0.34 watt-hours of electricity, roughly what an oven uses in a little over one second or a high-efficiency light bulb uses in a couple of minutes[1][3]. In addition, each query uses around 0.000085 gallons of water, or about one-fifteenth of a teaspoon[1]. Altman did not explain how these figures were calculated.
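The appliance comparisons can be sanity-checked by dividing the per-query energy by an appliance's power draw. The sketch below does this in Python. The wattages (1,000 W for an oven, 10 W for a high-efficiency bulb) are illustrative assumptions and do not come from Altman's post, so the results shift with whatever ratings are chosen.

```python
# Energy per query as stated in the article (attributed to Altman).
QUERY_ENERGY_WH = 0.34

# Assumed appliance power ratings in watts. These are illustrative
# values chosen for this sketch, not figures from the article.
ASSUMED_POWER_W = {
    "electric oven": 1000.0,
    "high-efficiency bulb": 10.0,
}


def run_time_seconds(energy_wh: float, power_w: float) -> float:
    """Return how many seconds a device drawing power_w runs on energy_wh."""
    energy_joules = energy_wh * 3600.0  # 1 Wh = 3600 J
    return energy_joules / power_w


for name, watts in ASSUMED_POWER_W.items():
    seconds = run_time_seconds(QUERY_ENERGY_WH, watts)
    print(f"{name} ({watts:.0f} W): {seconds:.1f} s")
```

Under these assumed ratings, the oven runs for a little over one second and the bulb for about two minutes, which matches the comparisons quoted above.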



Deeper Dive:

A single ChatGPT query's 0.34 watt-hours corresponds to 1,224 joules. Assuming typical power ratings, that is enough to run a 60-watt incandescent light bulb for about 20 seconds, a 10-watt high-efficiency light bulb for about two minutes, or a 1,000-watt oven for a little over one second. This is far less than most household appliances use in normal operation, which offers some relief about the environmental impact of an individual query.

Water Consumption Approximations:

At 0.000085 gallons per query, 1 gallon of water corresponds to roughly 11,800 ChatGPT queries. Per query, then, water consumption is insignificant compared with everyday activities that involve water.
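The water figures can be checked with the same kind of arithmetic. This minimal sketch assumes US liquid gallons and US teaspoons (the article does not say which units Altman used), and the function names are hypothetical.

```python
# Water per query as stated in the article (attributed to Altman).
GALLONS_PER_QUERY = 0.000085

# Assumption: US liquid gallon = 128 fl oz, and 1 fl oz = 6 US teaspoons.
TEASPOONS_PER_US_GALLON = 128 * 6  # 768


def teaspoons_per_query(gallons: float) -> float:
    """Convert a per-query volume in gallons to teaspoons."""
    return gallons * TEASPOONS_PER_US_GALLON


def queries_per_gallon(gallons: float) -> float:
    """Return how many queries add up to one gallon of water."""
    return 1.0 / gallons


tsp = teaspoons_per_query(GALLONS_PER_QUERY)
print(f"Teaspoons per query: {tsp:.4f} (about 1/{1 / tsp:.0f} of a teaspoon)")
print(f"Queries per gallon: {queries_per_gallon(GALLONS_PER_QUERY):,.0f}")
```

With the stated per-query figure, this works out to roughly one-fifteenth of a teaspoon per query and a little under 12,000 queries per gallon.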

In short, there is little reason to worry about the electricity consumption of a single ChatGPT query: it is about what a high-efficiency light bulb uses in a couple of minutes. Water consumption per query is likewise small, about one-fifteenth of a teaspoon, which is far less than everyday activities that use water. The concern lies in the sheer volume of queries rather than in any one of them.