ChatGPT Portrays Itself as a Cheerful, Brown-Haired White Man

GPT-4o, the latest model behind ChatGPT, launched just last week and has drawn wide attention, along with some controversy.

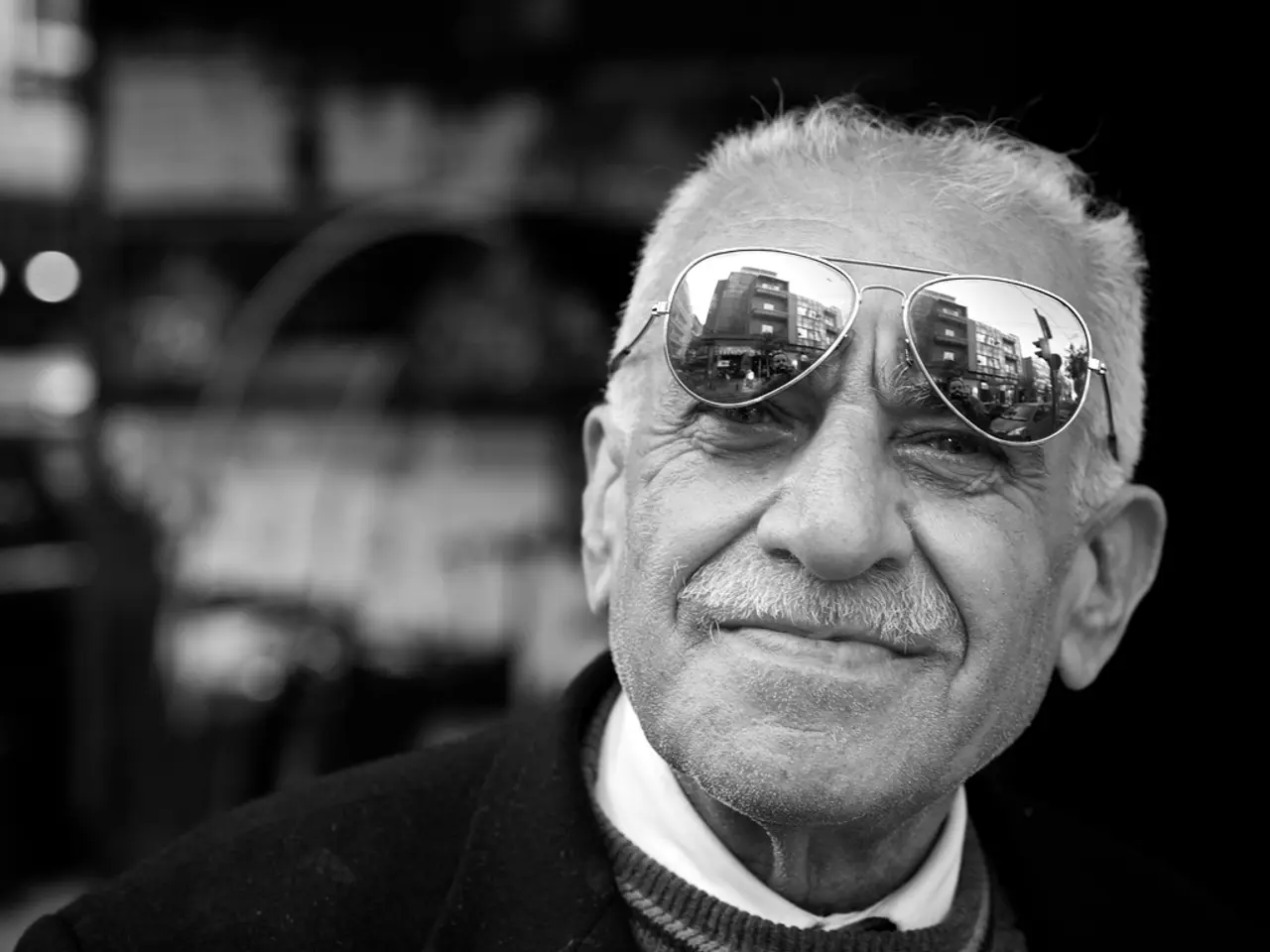

A user recently noticed an interesting anomaly in ChatGPT's self-portrayal. When asked to describe itself or generate an avatar-like image, the model consistently depicted a generic brown-haired white man with glasses. This observation has sparked a conversation about the latent biases embedded in AI training data and programming.

The user's findings highlight a common issue in AI: the tendency to reflect and perpetuate societal biases. Large language models like GPT-4o are trained on vast datasets that historically overrepresent Western and white male perspectives. As a result, when asked for self-descriptions or visual portrayals, the model may default to the most statistically common or stereotypical representation it has seen.

This phenomenon is a result of several factors:

- Data Bias: The input data reflects societal biases, including unequal representation by race, gender, and ethnicity. This skews model outputs toward dominant demographic archetypes.

- Model Generalization: The model abstracts and prioritizes the most frequent patterns in its data, which reinforces dominant stereotypes unless it is explicitly trained or constrained otherwise.

- Lack of Diversity in Training Inputs: Too few training examples representing minority or non-Western identities lead to generic or stereotypical outputs.

- Implicit Bias in AI Development: Developers and data curators may inadvertently embed cultural or societal biases through data selection, annotation, or modeling choices.
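
The first two factors can be illustrated with a toy sketch. It is not how GPT-4o works internally: the labels and counts below are hypothetical, made-up stand-ins for how often different archetypes might appear in a corpus, and the two functions are illustrative names only. It shows that a system which always picks the most frequent pattern returns the dominant archetype every time, while sampling in proportion to frequency still favors it heavily.

```python
from collections import Counter
import random

# Hypothetical counts (not real measurements): how often each archetype
# appears in an imagined, skewed training corpus.
corpus_counts = Counter({
    "archetype_a": 60,  # the overrepresented group
    "archetype_b": 20,
    "archetype_c": 12,
    "archetype_d": 8,
})


def greedy_pick(counts):
    """Always return the single most frequent archetype."""
    return counts.most_common(1)[0][0]


def sampled_pick(counts, rng):
    """Draw one archetype with probability proportional to its count."""
    labels = list(counts)
    weights = [counts[label] for label in labels]
    return rng.choices(labels, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs match
    greedy = Counter(greedy_pick(corpus_counts) for _ in range(1000))
    sampled = Counter(sampled_pick(corpus_counts, rng) for _ in range(1000))
    print("greedy: ", dict(greedy))
    print("sampled:", dict(sampled))
```

In this sketch, changing the output mix requires either rebalancing the counts or changing the selection rule, which loosely mirrors the data-side and model-side mitigations discussed in this article.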

OpenAI and other AI developers are aware of these biases and are actively working on mitigation strategies. They aim to diversify training data, apply fairness algorithms, and allow customization of AI personas or avatars to break one-size-fits-all stereotypes. However, complete elimination of bias remains a challenging task.

ChatGPT has no face, body, or real identity. Any "self-portrait" is a construct driven by the prompt and the model's training, not a depiction of an actual appearance. When prompted to describe itself or generate an avatar-like image, the model's default output can appear as a generic white male for the reasons listed above.

OpenAI has introduced multiple model personalities and customizable settings to diversify the user experience and reduce such defaults. Without explicit user input, however, the baseline tendencies persist.

This conversation around ChatGPT's self-portrayal underscores the ongoing challenge of creating truly inclusive and unbiased AI systems. As AI continues to permeate our lives, understanding and addressing these biases will be crucial for ensuring fair and equitable outcomes.

- ChatGPT's self-portrayal under GPT-4o, which consistently depicted a generic white man with glasses, has sparked discussion about latent biases in AI.

- AI developers, such as OpenAI, are working to mitigate biases by diversifying training data, applying fairness algorithms, and allowing customization of AI personas or avatars.

- Societal biases are deeply embedded in AI training data, leading to the model's tendency to reflect and perpetuate dominant demographic stereotypes.

- Across the broader tech landscape, companies working in AI, social media, entertainment, and news share a responsibility to build inclusive and unbiased AI systems.

- Gizmodo reported on the latest advancements in AI technology and the ongoing challenge of addressing hidden biases in AI systems like ChatGPT, emphasizing their wide-ranging impact on our society.