Improper Use of ChatGPT: Common Blunder Made by 99% of Users

When prompting AI models, especially for image generation, concise and focused prompts work best. Start with a base image and refine it step by step: for example, generate a picture of a mountain cabin in winter, then add northern lights to the sky, then make the cabin's lights look warm and glowing.

However, overloading a model with excessive detail can produce cluttered, confused, or incorrect outputs. Several factors contribute: context window limitations, a deteriorating signal-to-noise ratio, added computational complexity, and authority bias, where long, confident-sounding outputs seem more trustworthy than they are.

Context window limitations mean that even models with large context windows do not use all of their input equally well. Attention tends to be strongest at the beginning and end of the input, while material in the middle is more often overlooked or poorly integrated. Long prompts packed with irrelevant or loosely related details dilute the key facts and instructions, making it harder for the model to identify and focus on what matters most.
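One way to act on this is to state the core instruction first, keep supporting details in the middle, and restate the instruction at the end. The Python sketch below assembles a prompt that way. The function name `build_sandwiched_prompt` and the "Task:"/"Reminder" layout are illustrative assumptions, not a standard API or a guaranteed technique.

```python
def build_sandwiched_prompt(instruction: str, context: list[str]) -> str:
    """Put the key instruction at the start and restate it at the end.

    Supporting details go in the middle, the region the model tends to
    attend to least reliably. (Hypothetical helper for illustration.)
    """
    parts = [f"Task: {instruction}", ""]
    parts += [f"- {item}" for item in context]  # supporting details, one per line
    parts += ["", f"Reminder of the task: {instruction}"]
    return "\n".join(parts)


prompt = build_sandwiched_prompt(
    "Summarize the customer complaint in two sentences.",
    ["The order arrived late.", "The box was damaged.", "Support did not reply."],
)
print(prompt)
```

Restating the instruction costs only a few tokens and keeps the request in the positions the model is most likely to weigh heavily.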

To write effective prompts that improve accuracy and reduce cognitive load, follow these best practices:

- Be concise and focused: Provide only essential context and ask a clear, specific question to avoid overwhelming the model with irrelevant information.

- Structure inputs carefully: Separate unrelated concepts to avoid "context soup." Use segmented prompts or multiple queries if needed to keep contexts manageable.

- Prioritize information placement: Put the most critical details at the beginning or end of the input where the model’s attention is strongest.

- Use stepwise or iterative prompting: Break complex queries into smaller parts that can be answered sequentially and synthesized later, reducing overload.

- Leverage retrieval-augmented generation (RAG): When working with large external knowledge sources, provide curated, relevant snippets instead of dumping raw data, which improves the signal-to-noise ratio.

- Avoid overreliance on AI confidence: Critically evaluate AI outputs and combine them with human judgment to mitigate authority bias and overconfidence.
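Several of these practices (curated snippets, separated context, placement of the question) can be combined in a few lines. The sketch below picks only the snippets that share words with the question and places the question last. Word overlap is a deliberately simple stand-in for real retrieval, which usually relies on embeddings; the helper names are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    # Lowercase word tokens; a toy stand-in for embedding-based similarity.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_snippets(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents that share at least one word with the question."""
    q = tokenize(question)
    scored = [(len(q & tokenize(doc)), i, doc) for i, doc in enumerate(documents)]
    # Highest overlap first; ties keep their original order.
    scored.sort(key=lambda t: (-t[0], t[1]))
    return [doc for score, _, doc in scored[:k] if score > 0]


def build_rag_prompt(question: str, documents: list[str], k: int = 2) -> str:
    snippets = select_snippets(question, documents, k)
    context = "\n".join(f"[{n}] {s}" for n, s in enumerate(snippets, 1))
    # Instruction first, curated sources in the middle, question last.
    return f"Answer using only the sources below.\n\n{context}\n\nQuestion: {question}"


docs = [
    "The cabin is heated by a wood stove.",
    "Northern lights are most visible on clear winter nights.",
    "Snow tires improve traction on icy roads.",
]
question = "When are northern lights most visible?"
print(build_rag_prompt(question, docs))
```

Only the relevant snippet reaches the model; the unrelated documents are filtered out instead of diluting the prompt.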

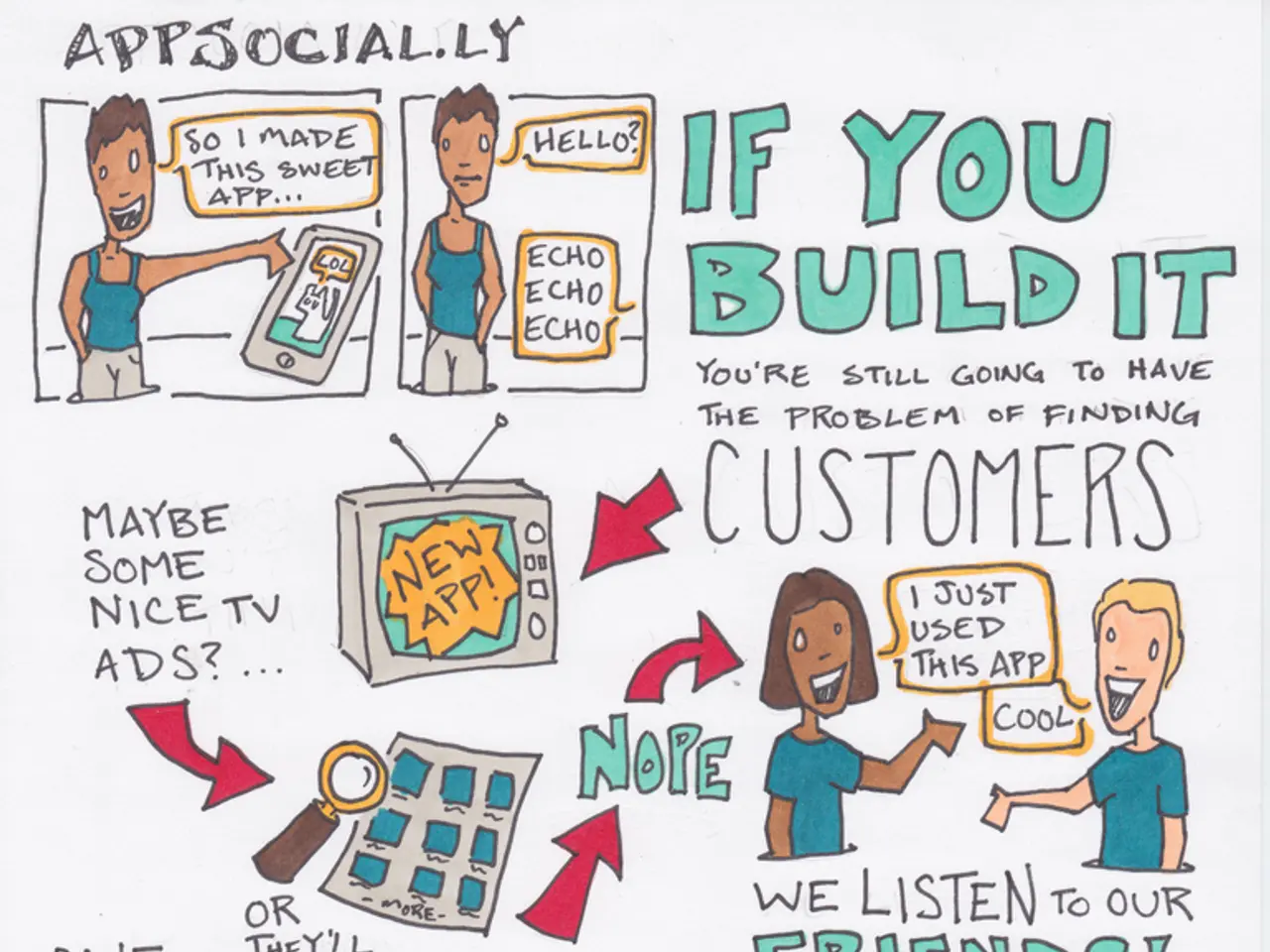

Treat the AI like a collaborative partner and add instructions after seeing the first output. Prompting isn't about stuffing your request with detail. It's about creating a clear path for the model to follow. Iteration beats perfection in prompting; start simple, iterate strategically, and learn by doing.

When a prompt includes too many variables, the model's ability to juggle them correctly drops sharply. Short, structured prompts usually outperform long, complex ones. In text-based prompts, overloading can lead to hallucinated facts, overgeneralized responses, and parts of the request being ignored or misinterpreted.

Use bullets, numbered steps, or clear sections to format multiple pieces of information. The assumption that "more details = better results" is flawed and holds most users back. A better way to prompt is to use simple, layered prompts, starting with a clear primary instruction, adding focused constraints, and iterating with follow-up instructions.
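The layered approach can be pictured as a conversation that starts with one primary instruction and adds one focused refinement per turn, reusing the mountain cabin example from the opening. The sketch assumes the chat-style list of `{"role", "content"}` messages that many chat APIs use; `ask_model` is a hypothetical callable, and `fake_model` is a stub so the example runs without any real service.

```python
from typing import Callable

Message = dict[str, str]


def layered_conversation(
    primary: str,
    follow_ups: list[str],
    ask_model: Callable[[list[Message]], str],
) -> list[Message]:
    """Send the primary instruction, then each refinement, keeping full history."""
    history: list[Message] = []
    for turn in [primary, *follow_ups]:
        history.append({"role": "user", "content": turn})
        reply = ask_model(history)  # hypothetical model call
        history.append({"role": "assistant", "content": reply})
    return history


def fake_model(history: list[Message]) -> str:
    # Stand-in for a real model: reports how many user turns it has seen.
    user_turns = sum(1 for m in history if m["role"] == "user")
    return f"draft v{user_turns}"


history = layered_conversation(
    "Generate a picture of a mountain cabin in winter.",
    ["Add northern lights in the sky.", "Make the cabin lights look warm and glowing."],
    fake_model,
)
for m in history:
    print(f"{m['role']}: {m['content']}")
```

Each turn carries a single change, so if a refinement goes wrong it is easy to see which instruction caused it.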

Focus on one goal per prompt when you first ask the model. For complex tasks, ask the model to reason step by step ("think out loud"), which can improve the logic of its final response. LLMs (large language models) do not "understand" language in the human sense; they predict text based on patterns learned from massive training data. Prompting is not about writing the "perfect" prompt, but about knowing how to guide the model step by step.

By pacing your instructions, you reduce the load on the model and can noticeably improve accuracy. Cut the noise, simplify your prompts, and watch your results get sharper.

- Excessive detail in a prompt can confuse AI models and lead to inaccurate outputs.

- To communicate effectively with AI and get precise results, avoid cluttered prompts and prioritize concise, focused inputs.